We’ve documented how to follow Routing Patterns and Resilience Patterns to manage routing and communication for thousands of microservices on your platform. But a production environment is far more complicated than that, because many practical problems still need to be addressed, such as isolating traffic, regression testing before a release, switching traffic to new deployments, and A/B testing. As before, we will discuss these problems as the patterns below across two articles. I call them Deployment Patterns because basically all of them can be solved by deploying your applications or services as different isolated sets of instances. Let's get started with the first two patterns.

- Traffic Channel / Multiple Tenants Traffic

- Testing In Production / Pre-release

- Canary Release

- A/B Testing

Traffic Channel / Multiple Tenants Traffic

Questions To Solve

How do we isolate different kinds of traffic from each other, so that performance degradation caused by heavy load from one type of traffic does not affect the others?

Common Design

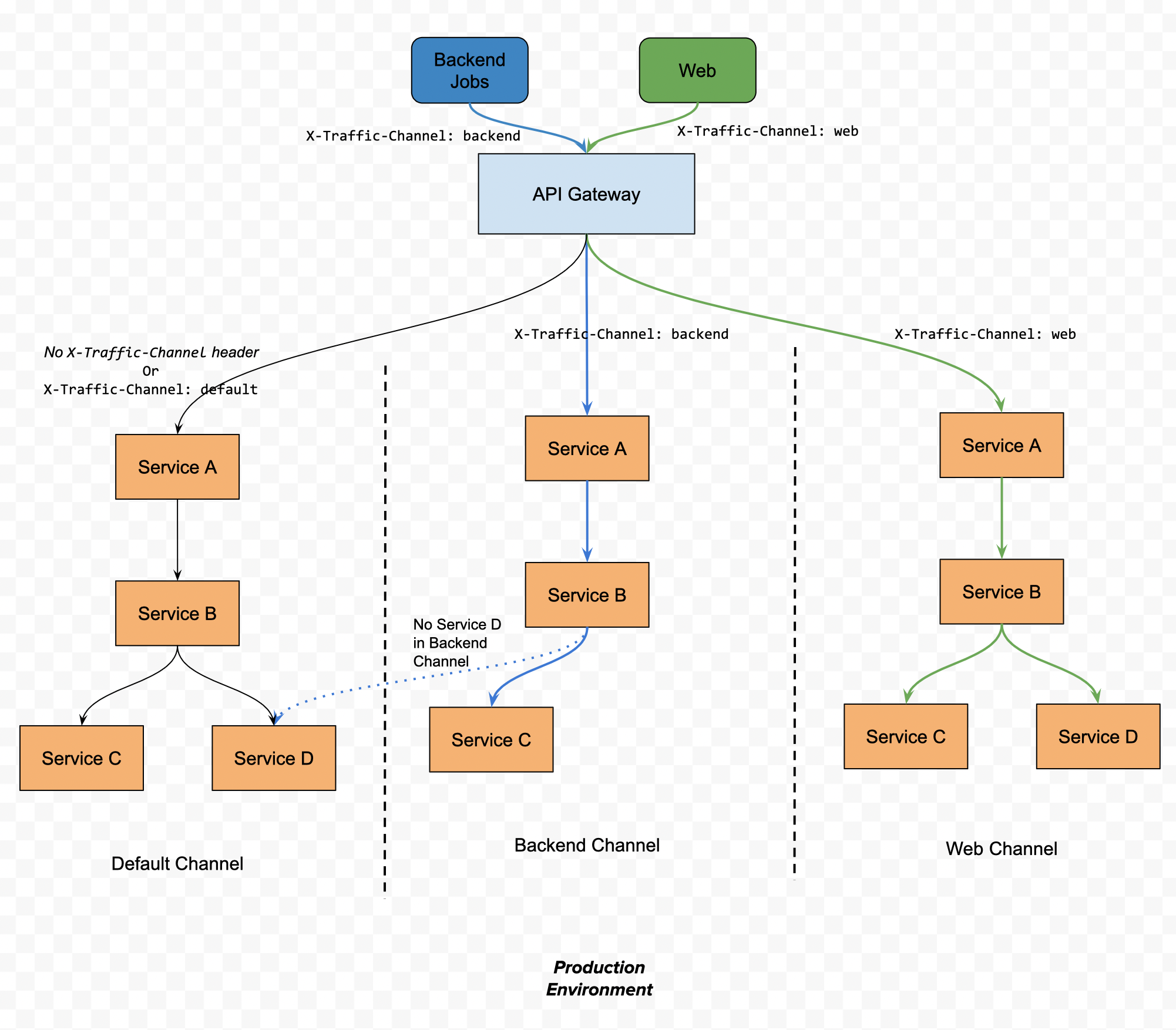

We need to split different kinds of traffic into corresponding isolated environments. For example, your backend ETL jobs might write a lot of domain data to the services managing that data at a particular time every day. You don’t want the performance degradation triggered by this activity to propagate, at the service level, to the user query requests coming from the website. Figure 1 shows one such example.

We can leverage Dynamic Service Routing to route traffic to different sets of instances of a specific service based on the data in each request. We define one of those sets of instances as a Channel. A custom (extension) HTTP header, X-Traffic-Channel, labels where an individual request should be routed, and the Service Routing component routes the request to the channel named in the header value. Of course, the client-side services must set this HTTP header, and it must be propagated across all downstream service calls as the request flows through the system.

Figure 1 Header-based Traffic Channel
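The propagation requirement described above can be sketched in a few lines. This is only an illustration: `propagate_channel` is a hypothetical helper, not part of App Mesh or any library, and it assumes each service can read the headers of the incoming request before it builds a downstream call.

```python
from typing import Dict, Mapping, Optional

# Header name taken from the article.
CHANNEL_HEADER = "X-Traffic-Channel"


def propagate_channel(
    incoming: Mapping[str, str],
    outgoing: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Return headers for a downstream call, carrying over the channel header.

    Hypothetical helper: copies X-Traffic-Channel from the incoming request
    (if present) into the headers of the outgoing request.
    """
    headers = dict(outgoing or {})
    # HTTP header names are case-insensitive, so compare lowercased names.
    for name, value in incoming.items():
        if name.lower() == CHANNEL_HEADER.lower():
            headers[CHANNEL_HEADER] = value
            break
    return headers


downstream_headers = propagate_channel(
    {"x-traffic-channel": "web", "Accept": "*/*"},
    {"Content-Type": "application/json"},
)
```

If a service drops the header on any hop, the calls further downstream carry no channel label and would be handled as unlabeled traffic, so in practice this copy step usually lives in a shared HTTP client wrapper or middleware rather than in each call site.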

Implementation in App Mesh

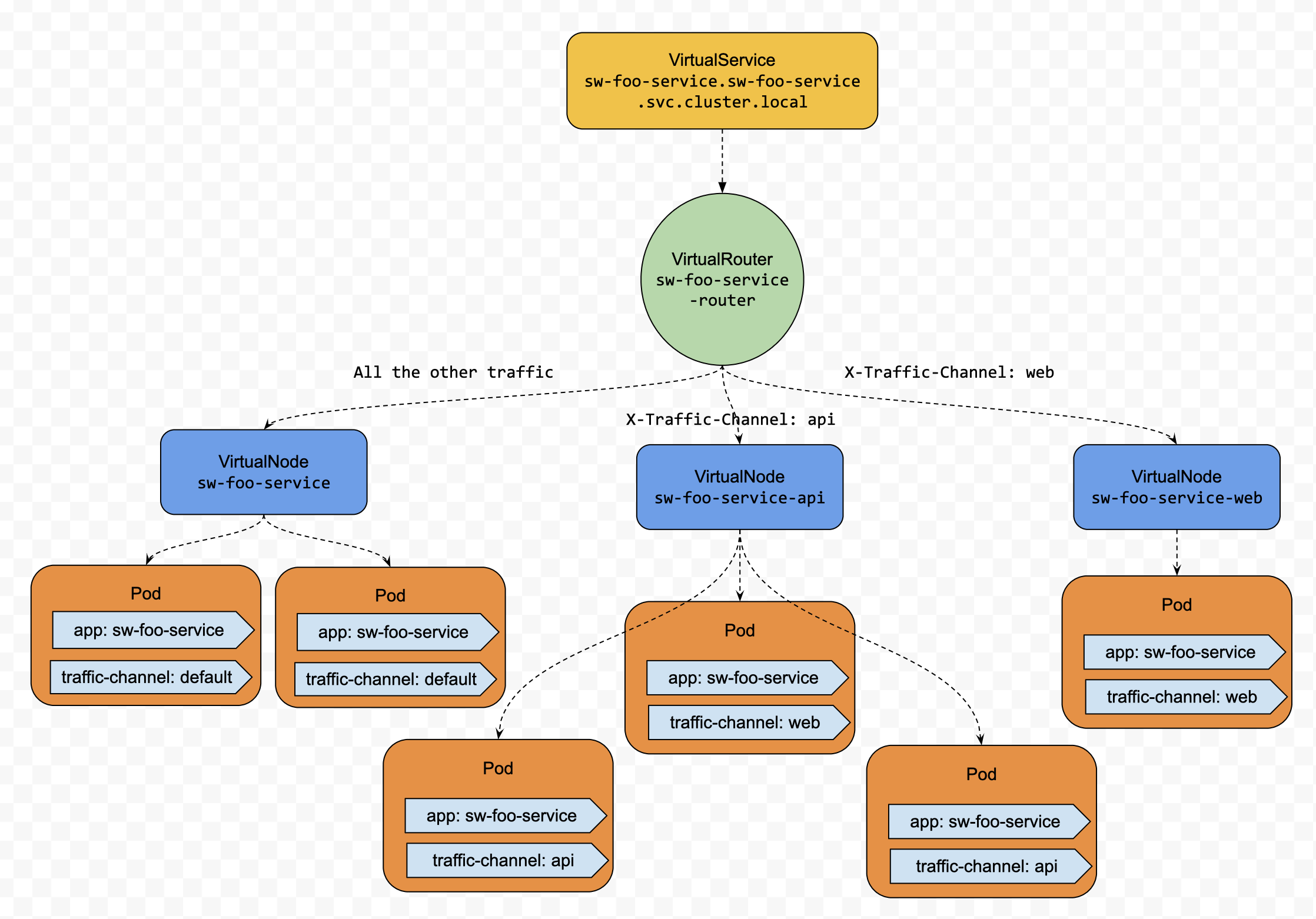

This pattern isolates different types of traffic and routes each one to a dedicated set of instances of a microservice. Each group then serves its traffic separately, so the groups do not degrade each other's performance at the compute-resource level. To implement this pattern, we need to:

- Establish the routing strategy for each service:

  - Create a VirtualNode for each channel. For example, if we have three channels, such as Web, API, and Default, we create three VirtualNodes accordingly.

  - Expose the service as one VirtualService. All clients only need to request the service through the FQDN of the VirtualService, with or without the traffic channel header, X-Traffic-Channel.

  - Create a VirtualRouter for that VirtualService and add the Traffic Channel routing rules to it as VirtualRoutes, which route the traffic to one of the VirtualNodes created above based on the traffic channel header.

- Schedule the pods of different channels onto different worker nodes by using the nodeSelector of the Kubernetes Deployment.

Let’s use sw-foo-service as an example. We will create three channels for sw-foo-service: web, api, and default. Any traffic that has no dedicated channel is routed to the default channel.
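To make the fallback behavior concrete, here is a minimal sketch of the routing decision. It only models the logic in plain Python; the real decision is made by the mesh's routing component, and `select_channel` and the constants are hypothetical names used for illustration.

```python
from typing import Mapping

CHANNEL_HEADER = "x-traffic-channel"  # compared in lowercase
DEFAULT_CHANNEL = "default"
# Channels that have their own dedicated set of instances in this example.
DEDICATED_CHANNELS = {"web", "api"}


def select_channel(headers: Mapping[str, str]) -> str:
    """Pick the channel for a request, falling back to the default channel."""
    # Header names are case-insensitive; values are matched exactly.
    normalized = {name.lower(): value for name, value in headers.items()}
    requested = normalized.get(CHANNEL_HEADER)
    if requested in DEDICATED_CHANNELS:
        return requested
    # Missing header, or a value with no dedicated channel.
    return DEFAULT_CHANNEL


web_target = select_channel({"X-Traffic-Channel": "web"})
etl_target = select_channel({"X-Traffic-Channel": "etl"})
```

Here a request labeled web goes to the web channel, while a request labeled etl, which has no dedicated channel in this example, ends up on the default channel along with unlabeled requests.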

Firstly, let’s see what K8s native resources we need. On the one hand, we need a plain Service without a label selector, which gets a cluster-local FQDN from K8s. The App Mesh VirtualService sets its awsName field to that same FQDN so that it can intercept the network traffic targeting the service.

    # FQDN: sw-foo-service.sw-foo-service.svc.cluster.local
    apiVersion: v1
    kind: Service
    metadata:
      name: sw-foo-service
      namespace: sw-foo-service
    spec:
      ports:
        - protocol: TCP
          port: 8080

On the other hand, three traffic channels mean that three kinds of traffic must be served separately, so we need to deploy sw-foo-service as three isolated sets of instances. Since a K8s Deployment resource deploys one group of service instances, we need three Deployment resources with different label values, as shown below. We also need a nodeSelector in each Deployment to tell K8s to schedule its Pods onto different worker nodes.

    # The default channel Deployment
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: sw-foo-service-default
      namespace: sw-foo-service
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: sw-foo-service
          traffic-channel: default
      template:
        metadata:
          labels:
            app: sw-foo-service
            traffic-channel: default
        spec:
          containers:
            - name: sw-foo-service
              image: sw-foo-service-ecr:BUILD-29
              ports:
                - containerPort: 8080
              env:
                - name: "SERVER_PORT"
                  value: "8080"
                - name: "COLOR"
                  value: "blue"
          # Schedule these Pods only onto worker nodes labeled for this channel
          nodeSelector:
            traffic-channel: default
    ---
    # The web channel Deployment
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: sw-foo-service-web
      namespace: sw-foo-service
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: sw-foo-service
          traffic-channel: web
      template:
        metadata:
          labels:
            app: sw-foo-service
            traffic-channel: web
        spec:
          containers:
            - name: sw-foo-service
              image: sw-foo-service-ecr:BUILD-29
              ports:
                - containerPort: 8080
              env:
                - name: "SERVER_PORT"
                  value: "8080"
                - name: "COLOR"
                  value: "blue"
          nodeSelector:
            traffic-channel: web
    ---
    # The api channel Deployment
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: sw-foo-service-api
      namespace: sw-foo-service
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: sw-foo-service
          traffic-channel: api
      template:
        metadata:
          labels:
            app: sw-foo-service
            traffic-channel: api
        spec:
          containers:
            - name: sw-foo-service
              image: sw-foo-service-ecr:BUILD-29
              ports:
                - containerPort: 8080
              env:
                - name: "SERVER_PORT"
                  value: "8080"
                - name: "COLOR"
                  value: "blue"
          nodeSelector:
            traffic-channel: api
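The three Deployments differ only in their name, replica count, and channel label, so they can be generated from one template instead of being maintained by hand. The sketch below is one possible way to do that, not the author's tooling: `build_channel_deployment` is a hypothetical helper, the `sw-foo-service-<channel>` naming is an assumption, and the env entries are left out for brevity.

```python
import json
from typing import Any, Dict


def build_channel_deployment(channel: str, replicas: int) -> Dict[str, Any]:
    """Build one Deployment manifest (as a dict) for a traffic channel."""
    labels = {"app": "sw-foo-service", "traffic-channel": channel}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {
            # Assumed naming scheme: one Deployment per channel.
            "name": f"sw-foo-service-{channel}",
            "namespace": "sw-foo-service",
        },
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": "sw-foo-service",
                            "image": "sw-foo-service-ecr:BUILD-29",
                            "ports": [{"containerPort": 8080}],
                        }
                    ],
                    # Pin the Pods to worker nodes labeled for this channel.
                    "nodeSelector": {"traffic-channel": channel},
                },
            },
        },
    }


# Replica counts taken from the manifests in this article.
CHANNEL_REPLICAS = {"default": 2, "web": 3, "api": 5}
deployments = [
    build_channel_deployment(channel, replicas)
    for channel, replicas in CHANNEL_REPLICAS.items()
]
rendered = json.dumps(deployments[1], indent=2)
```

Because kubectl accepts JSON manifests as well as YAML, the rendered output could be applied directly, although a templating tool you already use for manifests would serve the same purpose.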

Secondly, let’s see what App Mesh virtual resources we need. We need 1 VirtualService as an entry point for all kinds of traffic, 1 VirtualRouter with 3 VirtualRoutes as a router for routing different traffic to different sets of instances, and 3 VirtualNodes as three groups of endpoints for instances.

    # 1 VirtualService
    apiVersion: appmesh.k8s.aws/v1beta2
    kind: VirtualService
    metadata:
      name: sw-foo-service
      namespace: sw-foo-service
    spec:
      # Same as the FQDN of the K8s Service
      awsName: sw-foo-service.sw-foo-service.svc.cluster.local
      provider:
        virtualRouter:
          virtualRouterRef:
            name: sw-foo-service-router
    ---
    # 1 VirtualRouter with 3 VirtualRoutes
    apiVersion: appmesh.k8s.aws/v1beta2
    kind: VirtualRouter
    metadata:
      name: sw-foo-service-router
      namespace: sw-foo-service
    spec:
      listeners:
        - portMapping:
            port: 8080
            protocol: http
      routes:
        - name: web-channel-route
          httpRoute:
            match:
              prefix: /
              headers:
                - name: X-Traffic-Channel
                  match:
                    exact: web
            action:
              weightedTargets:
                - virtualNodeRef:
                    name: sw-foo-service-web
                  weight: 1
        - name: api-channel-route
          httpRoute:
            match:
              prefix: /
              headers:
                - name: X-Traffic-Channel
                  match:
                    exact: api
            action:
              weightedTargets:
                - virtualNodeRef:
                    name: sw-foo-service-api
                  weight: 1
        - name: default
          httpRoute:
            match:
              prefix: / # default match with no priority
            action:
              weightedTargets:
                - virtualNodeRef:
                    name: sw-foo-service
                  weight: 1
    ---
    # 1 VirtualNode for default channel
    apiVersion: appmesh.k8s.aws/v1beta2
    kind: VirtualNode
    metadata:
      name: sw-foo-service
      namespace: sw-foo-service
    spec:
      podSelector:
        matchLabels:
          app: sw-foo-service
          # Select only the default channel Pods, not the web or api ones
          traffic-channel: default
      listeners:
        - portMapping:
            port: 8080
            protocol: http
          healthCheck:
            ...
      serviceDiscovery:
        awsCloudMap:
          namespaceName: foo.prod.softwheel.aws.local
          serviceName: sw-foo-service
          attributes:
            - key: traffic-channel
              value: default
    ---
    # 1 VirtualNode for web channel
    apiVersion: appmesh.k8s.aws/v1beta2
    kind: VirtualNode
    metadata:
      name: sw-foo-service-web
      namespace: sw-foo-service
    spec:
      podSelector:
        matchLabels:
          app: sw-foo-service
          traffic-channel: web
      listeners:
        - portMapping:
            port: 8080
            protocol: http
          healthCheck:
            ...
      serviceDiscovery:
        awsCloudMap:
          namespaceName: foo.prod.softwheel.aws.local
          serviceName: sw-foo-service
          attributes:
            - key: traffic-channel
              value: web
    ---
    # 1 VirtualNode for api channel
    apiVersion: appmesh.k8s.aws/v1beta2
    kind: VirtualNode
    metadata:
      name: sw-foo-service-api
      namespace: sw-foo-service
    spec:
      podSelector:
        matchLabels:
          app: sw-foo-service
          traffic-channel: api
      listeners:
        - portMapping:
            port: 8080
            protocol: http
          healthCheck:
            ...
      serviceDiscovery:
        awsCloudMap:
          namespaceName: foo.prod.softwheel.aws.local
          serviceName: sw-foo-service
          attributes:
            - key: traffic-channel
              value: api

Testing In Production / Pre Release

Questions To Solve

How do we run end-to-end integration tests against one service in a production environment?

Common Design

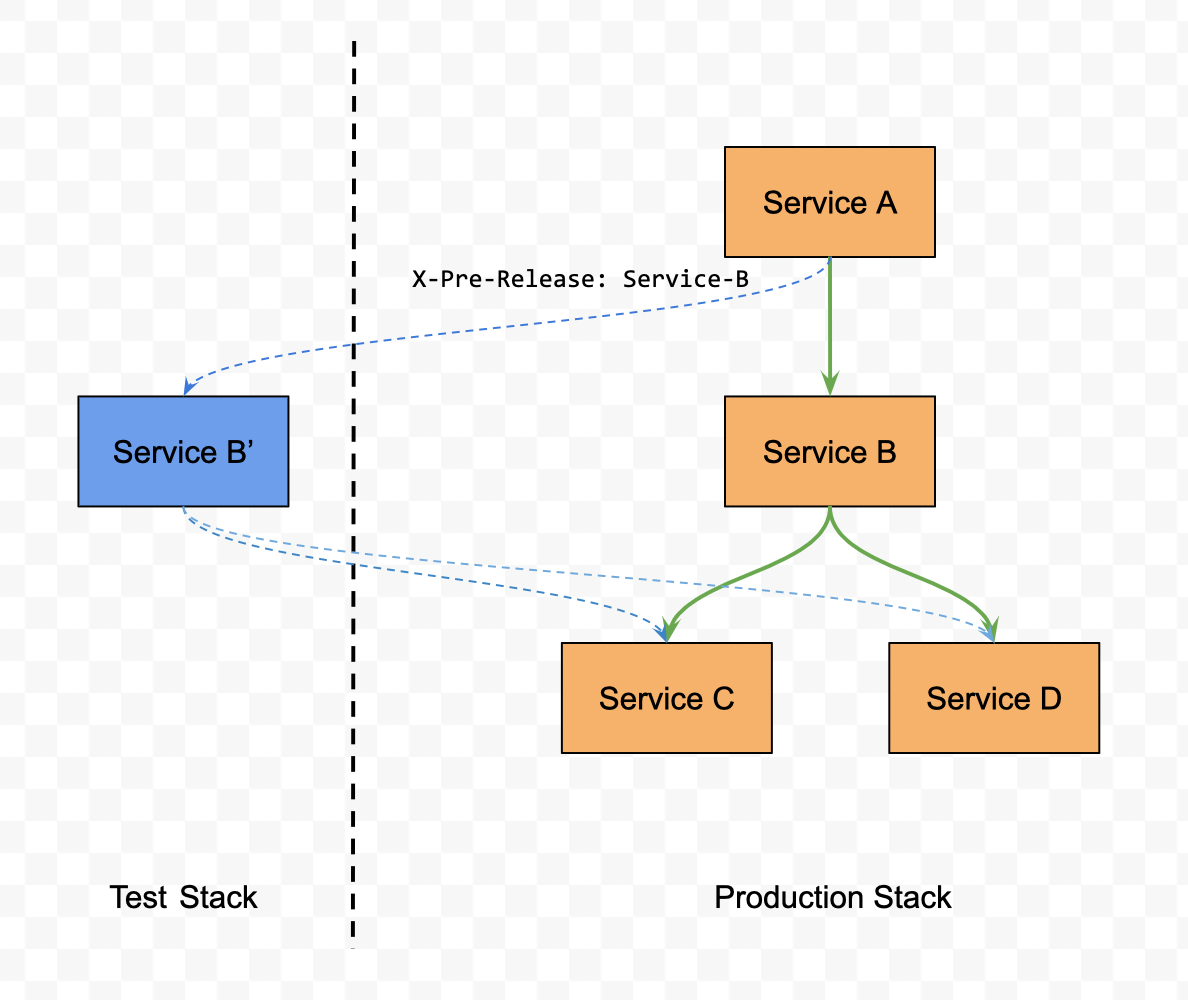

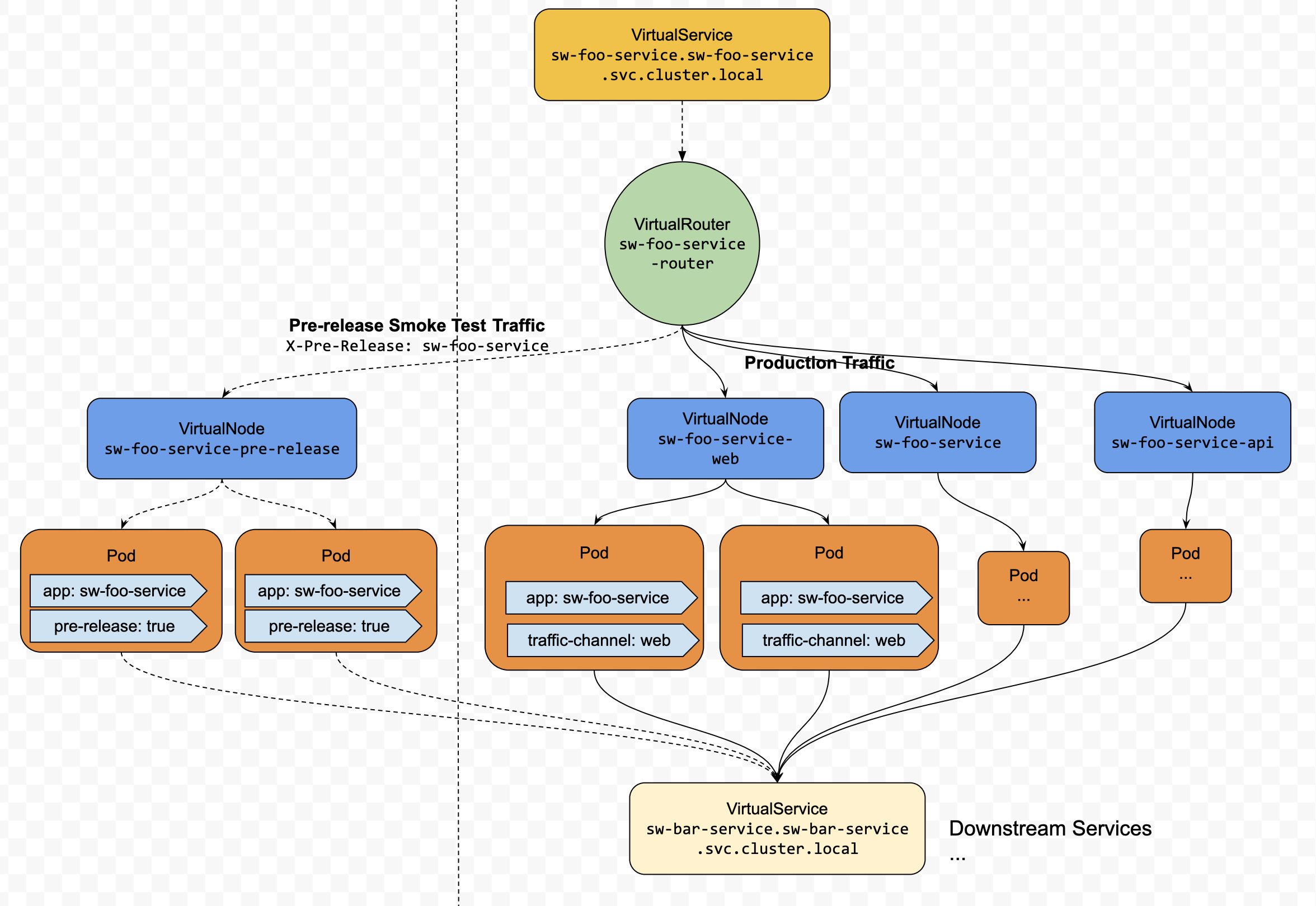

The microservice architecture breaks down a complex monolithic software system into smaller, more manageable services, which can be built and deployed independently of each other. However, this flexibility makes it hard to release each service without breaking the whole system. So we need a way to test the entire system with the service that is about to be released running alongside the other production services. Figure 2 shows one such example.

We can also leverage Dynamic Service Routing to implement this pattern, based on a custom (extension) HTTP header such as X-Pre-Release. Firstly, we deploy the new version of the service in an isolated stack, with production environment configurations, in parallel with the production version of that service. Then, when we run the smoke tests of the end-to-end functionality, we set the header to X-Pre-Release: Service-B. When the Service Routing component receives a request with that header, it routes the traffic to the test version of the service, Service B’. Otherwise, it routes the traffic to the production service, Service B.

Figure 2 Test new or updated microservices alongside production services
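The routing rule described above boils down to one comparison per service: only the service named in the header is swapped for its test version, and every other service on the call path stays on production. The sketch below models that decision in plain Python. `select_target` and the `-pre-release` suffix are hypothetical names for illustration; in the mesh, this decision is made by the routing component rather than by application code.

```python
from typing import Mapping

PRE_RELEASE_HEADER = "x-pre-release"  # compared in lowercase


def select_target(service_name: str, headers: Mapping[str, str]) -> str:
    """Return which set of instances should serve a call to `service_name`."""
    # Header names are case-insensitive; the value must equal the service name.
    normalized = {name.lower(): value for name, value in headers.items()}
    if normalized.get(PRE_RELEASE_HEADER) == service_name:
        # Hypothetical name for the isolated test version of the service.
        return f"{service_name}-pre-release"
    return service_name


test_headers = {"X-Pre-Release": "Service-B"}
target_for_b = select_target("Service-B", test_headers)
target_for_a = select_target("Service-A", test_headers)
```

With the test header set, calls to Service-B land on the test version while calls to Service-A still reach production, which is what lets one smoke test run exercise the new version against the real system. As with the traffic channel header, this only works if every service on the path forwards X-Pre-Release to its downstream calls.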

Implementation in App Mesh

This is another feature that can be implemented with header-based dynamic routing. To implement this pattern, we need to:

- Create a Deployment for each service, which deploys the test version of the service with a few replicas and labels the instances as pre-release: true.

- Create a VirtualNode for each service for the Deployment from the previous step, which exposes those instances for discovery through the additional Service Discovery attribute, key: pre-release and value: true.

- Create a VirtualRoute for each service that matches the X-Pre-Release header when its value is the name of that service, and whose target is the VirtualNode from the previous step.

Then we can run all of the e2e smoke tests with the header X-Pre-Release: ${service-name}, after which we can scale the Deployment from the first step to zero or delete it.

Firstly, let's see what the K8s Deployment resource looks like to deploy this testing version of code as an isolated set of instances.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: sw-foo-service-pre-release
      namespace: sw-foo-service
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: sw-foo-service
          # Label values must be strings, so the value is quoted
          pre-release: "true"
      template:
        metadata:
          labels:
            app: sw-foo-service
            pre-release: "true"
        spec:
          containers:
            - name: sw-foo-service
              image: sw-foo-service-ecr:BUILD-29
              ports:
                - containerPort: 8080
              env:
                - name: "SERVER_PORT"
                  value: "8080"
                - name: "COLOR"
                  value: "blue"

Secondly, and more importantly, let’s look at how to use App Mesh virtual resources to implement this pattern.

    # 1 VirtualService as an entry point of all traffic
    apiVersion: appmesh.k8s.aws/v1beta2
    kind: VirtualService
    metadata:
      name: sw-foo-service
      namespace: sw-foo-service
    spec:
      awsName: sw-foo-service.sw-foo-service.svc.cluster.local
      provider:
        virtualRouter:
          virtualRouterRef:
            name: sw-foo-service-router
    ---
    # 1 VirtualRouter with 1 more VirtualRoute before default one
    apiVersion: appmesh.k8s.aws/v1beta2
    kind: VirtualRouter
    metadata:
      name: sw-foo-service-router
      namespace: sw-foo-service
    spec:
      listeners:
        - portMapping:
            port: 8080
            protocol: http
      routes:
        - name: web-channel-route
          ...
        - name: pre-release-route
          httpRoute:
            match:
              prefix: /
              headers:
                - name: X-Pre-Release
                  match:
                    exact: sw-foo-service
            action:
              weightedTargets:
                - virtualNodeRef:
                    name: sw-foo-service-pre-release
                  weight: 1
        - name: default
          httpRoute:
            match:
              prefix: / # default match with no priority
            action:
              weightedTargets:
                - virtualNodeRef:
                    name: sw-foo-service
                  weight: 1
    ---
    # 1 VirtualNode for the pre-release instances
    apiVersion: appmesh.k8s.aws/v1beta2
    kind: VirtualNode
    metadata:
      name: sw-foo-service-pre-release
      namespace: sw-foo-service
    spec:
      podSelector:
        matchLabels:
          app: sw-foo-service
          pre-release: "true"
      listeners:
        - portMapping:
            port: 8080
            protocol: http
          healthCheck:
            ...
      serviceDiscovery:
        awsCloudMap:
          namespaceName: foo.prod.softwheel.aws.local
          serviceName: sw-foo-service
          attributes:
            - key: pre-release
              value: "true"

Wrap Up

We discussed how to leverage the two Deployment Patterns listed below to build a robust, production-ready microservice architecture. The first isolates multiple kinds of traffic so that performance degradation caused by one type of high-pressure traffic does not spread to the others. The second establishes the ability to run end-to-end integration tests against one service alongside all the other services in a production environment.

- Traffic Channel / Multiple Tenants Traffic

- Testing In Production / Pre Release

I will cover the other deployment patterns in a second post shortly. Thanks a lot for reading this one. See you soon!